The narrative around OpenAI is starting to feel increasingly familiar: a company that emerges from the technological forefront, attracts enormous investment and global talent, becomes indispensable to its partners and raises the highest expectations, while at the same time accumulating enormous and growing costs.

All this in a context where technology is not just a tool but a vector of power, influence and dependence. This time it is not simply about building a revolutionary platform: it is about asking whether we are facing a repeat of the classic Big Tech script, and understanding why that is disturbing.

From the outset, OpenAI adopted a hybrid strategy: exploring what is disruptive and scaling it as a service, while forging alliances with giants like Microsoft. This combination allowed it to position itself as a central player in the artificial intelligence ecosystem, with valuations that quickly reached stratospheric levels, strategic agreements and an almost structural role in cloud strategy, foundation models and customer service.

But what looks like meteoric success is also a latent risk: reaching a point where its fall would be so traumatic for the economy and the sector that it becomes "too big to fail".

The term, which has already appeared in several analyses of the industry, reveals that the problem is not just financial but strategic and systemic: when a company becomes essential, with deep connections in infrastructure, talent, data and capital, its destiny no longer depends exclusively on its market. It becomes dependent on its network, its partners and its ability to bear the weight of its huge bets.


OpenAI aspires to be a protagonist in the coming generation of artificial general intelligence, and its moves suggest that it thinks on a scale that goes beyond the product, seeking to assume a structural role.

But this scale comes with increasing costs: massive investments in data centers, specialized chips, scarce talent and strategic collaborations with other corporations, all of which sharply raise barriers to entry. The paradox is that the more indispensable a company becomes to an ecosystem, the more vulnerable that ecosystem becomes to the company's own mistakes, which can turn into systemic crises.

If OpenAI fails, it could precipitate a chain of consequences that affect those who depend on its models, its infrastructure, or its alliances.

Another worrying feature is the combination of two dynamics: on the one hand, the enormous spending by large technology companies on artificial intelligence (data centers, chips, cloud services, etc.) and, on the other, the difficulty of turning that spending into clearly growing revenue or into products that justify the investment in the short term.

This tension is heightened when OpenAI and other players assume that success lies not simply in offering functionality but in dominating a platform logic: standardized models, mass distribution, cloud dependency and monetization through expanded ecosystems. That is exactly the structure that defined the giants of the past.


The fundamental question is whether we are facing a new cycle of concentration of technological power, of closed ecosystems in which those who board the train commit to a dependence that now seems inevitable. OpenAI thus becomes both a catalyst and a warning: its success can generate enormous positive externalities, but it also leads to dynamics of lock-in, rising barriers and high systemic risk.

And we already know these dynamics: weakened competition, accumulated market power, side effects on innovation and the risk of regulatory capture.

Not all is lost: this time the context is different, with greater public sensitivity, a shift in the debate towards technological sovereignty, genuine regulatory concern and a growing awareness that the giants of tomorrow may require different mechanisms from those of yesterday.

In this scenario, OpenAI's ambition can be a driving force for the industry, for the adoption of artificial intelligence and for the profound (and inevitable) transformation of many economies. But it also requires us to scrutinize its business relationships, its revenue model, its responsibility towards the system and its capacity for decentralization.

The biggest risk is not just that OpenAI will dominate artificial intelligence, but that in doing so it will reproduce a pattern we already know: hegemonic companies, proprietary infrastructures, dependent customers and developers, investments that can only be justified by long-term returns, and an ecosystem that adjusts to its logic instead of taking shape in a plural way.

Instead of opening artificial intelligence up to the world, we could be accepting another form of technological dominance, with a hierarchy that favors those already inside it.

What's at stake is not just how big OpenAI will get, but how dependent we will become on it. Because technology is not neutral, and the structures it builds shape the future of industry, employment and society.

If OpenAI follows the full Big Tech roadmap, Europe and Spain will not be able to simply regulate it: they will have to make sure they compete, innovate and diversify, so that artificial intelligence is not just controlled but distributed, and no single dominant player imposes its standards, business models and models of dependency.

OpenAI could be the spark of a new cycle of innovation or the symbol of an accumulated power that is difficult to neutralize. The important thing is to see it for what it really is: not just a spectacular startup that has followed the Silicon Valley playbook to the letter, but a potential architecture of tomorrow.

And when what is at risk is the way in which the artificial intelligence industry is built, it is not enough to admire the novelty: it is necessary to anticipate the dynamics, understand the alliances, question the models and decide what future we want. Because, as in the history of technological giants, what seems inevitable today may become irreversible tomorrow.

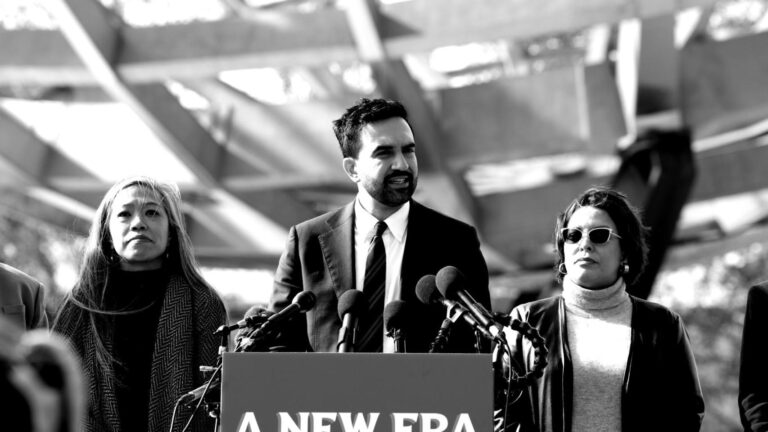

*Enrique Dans is a professor of Innovation at IE University.*